In this section, we first define the problem statement for extreme classification, then provide details on our proposed model, and finally discuss the objective function and evaluation metrics.

1. Experimental Setup

We conducted our experiments on a custom sensor board image dataset characterized by extreme class imbalance, in which one class is heavily underrepresented. Sample sensor board images are shown in Fig. 2. To evaluate the effectiveness of the proposed method, we conducted a series of ablation studies that examine the effect of each component and identify the optimal architecture. All models were implemented with the TensorFlow deep learning library and trained on a single NVIDIA TITAN Xp GPU with 12 GB of memory.

Sensor board dataset. (a)-(b) Good sensor board images, (c)-(d) defective sensor board images, (e)-(f) vertical and horizontal flips of defective sensor board image (c).

Our sensor dataset consists of two classes: 998 good images and 35 defective images, so the defective class is the minority (roughly 28.5 good images per defective one, or about 3.4% of the data). This imbalance reflects real-world manufacturing, where machines predominantly produce good sensors and only rarely produce defective ones. Deep learning models trained on such data are often biased toward the majority class, resulting in poor predictions for the minority class. Our experiments therefore aim to mitigate this bias and improve model performance and generalization. To address the class imbalance, we designed two cases:

$\textbf{ㆍCase I:}$ The original, highly imbalanced dataset was used without any modification to perform classification under extreme class imbalance.

$\textbf{ㆍCase II:}$ Data augmentation techniques, namely horizontal and vertical flips, were applied to the defective class to increase its sample size. This approach partially bridged the gap between the two classes and allowed us to explore the impact of balancing the dataset.

For both cases, the dataset was split into approximately 80% for training and 20% for testing. In Case I, 800 good images and 28 defective images were allocated for training, while the remaining 198 good images and 7 defective images were used for testing. In Case II, the defective class was augmented, increasing its training set to 80 defective images and its testing set to 35 defective images, while the good images remained the same as in Case I.

To address class imbalance, we selected horizontal and vertical flips as augmentation techniques because they preserve defect characteristics while increasing the diversity of the minority class. Since sensor board defects are often orientation-invariant, these transformations expose the model to new variations without introducing unrealistic distortions. Unlike other transformations such as rotation or color alteration, flipping keeps defect patterns realistic while expanding the dataset.
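The flip-based augmentation can be illustrated with a minimal, framework-free sketch. It assumes an image is stored as a nested list of rows (each pixel a scalar or a list of channel values); the helper names `hflip`, `vflip`, and `augment_minority` are hypothetical. In TensorFlow, the corresponding operations are `tf.image.flip_left_right` and `tf.image.flip_up_down`.

```python
from typing import List

# An image is a list of rows; each pixel may be a scalar or a per-pixel list of channel values.
Image = List[list]


def hflip(img: Image) -> Image:
    """Horizontal flip: mirror each row left to right (pixels keep their channel values)."""
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    """Vertical flip: reverse the order of the rows, copying each row."""
    return [list(row) for row in reversed(img)]


def augment_minority(images: List[Image]) -> List[Image]:
    """Return the original minority-class images followed by their horizontal and vertical flips."""
    augmented = list(images)
    for img in images:
        augmented.append(hflip(img))
        augmented.append(vflip(img))
    return augmented


defect = [[1, 2],
          [3, 4]]
print(hflip(defect))                    # [[2, 1], [4, 3]]
print(vflip(defect))                    # [[3, 4], [1, 2]]
print(len(augment_minority([defect])))  # 3
```

Both flips are exact permutations of pixel positions, so no interpolation artifacts are introduced, which is the property the text relies on when arguing that flipped defects remain realistic.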

All models were trained with the Adaptive Moment Estimation (Adam) optimizer using a learning rate of $1\times 10^{-3}$, a batch size of 32, and the binary cross-entropy loss function.
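For reference, binary cross-entropy averages $-[y\log p + (1-y)\log(1-p)]$ over a batch, where $y\in\{0,1\}$ is the label and $p$ is the predicted probability of the positive class (assumed here to be the defective class). The pure-Python sketch below computes it; the function name and the clipping constant `eps`, which avoids $\log 0$, are assumptions for illustration.

```python
import math
from typing import Sequence


def binary_cross_entropy(y_true: Sequence[int], p_pred: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy; p_pred holds predicted probabilities of the positive class."""
    if len(y_true) != len(p_pred):
        raise ValueError("y_true and p_pred must have the same length")
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so that log(p) and log(1 - p) stay finite
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


print(round(binary_cross_entropy([1, 0], [0.5, 0.5]), 4))  # 0.6931, i.e. ln 2
```

A maximally uncertain prediction ($p=0.5$) costs $\ln 2$ per sample regardless of the label, while confident correct predictions drive the loss toward zero.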

2. Evaluation Metrics

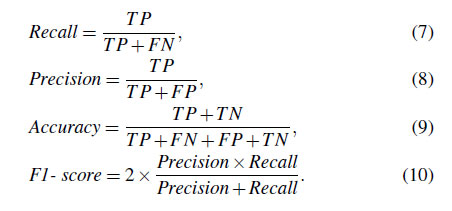

This paper addresses the challenge of classifying highly imbalanced datasets with a significantly underrepresented minority class. To evaluate model performance, we employ threshold-based metrics, including accuracy, recall, precision, and F1-score. Here, minority class recall corresponds to the true positive rate (TPR), while majority class recall corresponds to the true negative rate (TNR); further details can be found in Table 2.
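The per-class recall values can be computed directly from confusion-matrix counts. The sketch below treats the defective (minority) class as positive, consistent with the TPR/TNR mapping above; the function names are hypothetical, and returning 0 when a denominator is zero is an assumed convention.

```python
def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero (assumed convention)."""
    return num / den if den else 0.0


def imbalance_metrics(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Threshold-based metrics with the defective (minority) class as the positive class."""
    tpr = safe_div(tp, tp + fn)          # minority-class recall (true positive rate)
    tnr = safe_div(tn, tn + fp)          # majority-class recall (true negative rate)
    precision = safe_div(tp, tp + fp)
    f1 = safe_div(2 * precision * tpr, precision + tpr)
    accuracy = safe_div(tp + tn, tp + tn + fp + fn)
    return {"accuracy": accuracy, "precision": precision,
            "recall_tpr": tpr, "recall_tnr": tnr, "f1": f1}


# Degenerate "always good" classifier on the Case I test split: 7 defective, 198 good.
m = imbalance_metrics(tp=0, fn=7, tn=198, fp=0)
print(round(m["accuracy"], 4), m["recall_tpr"], m["f1"])  # 0.9659 0.0 0.0
```

The usage example shows why accuracy alone is misleading here: labeling every test image as good still yields about 96.6% accuracy while detecting no defects at all.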

In defect detection, the choice of evaluation metrics is critical for real-world manufacturing decisions. Although accuracy measures overall correctness across all classes, it can be misleading in imbalanced scenarios: a model that predicts only the majority class may still achieve high accuracy while missing most defects. Recall is vital because it measures how many actual defects are correctly identified; missing a single defect can have severe cost, safety, or reliability implications. Precision complements recall by indicating how many predicted defects are genuinely defective, thus limiting false positives that could disrupt manufacturing or trigger unnecessary re-inspection. Finally, the F1-score, the harmonic mean of precision and recall, balances the need to catch every defect against the need to avoid excessive false alarms. Focusing on these metrics, especially recall and F1-score, ensures that the model reliably identifies defects without overwhelming production lines with unnecessary rechecks, ultimately supporting a more reliable and efficient defect detection process. The mathematical definitions of these metrics are as follows:
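Assuming the standard confusion-matrix conventions, with the defective class treated as positive and $TP$, $TN$, $FP$, and $FN$ denoting true positives, true negatives, false positives, and false negatives, these metrics take the usual form:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\text{Precision} = \frac{TP}{TP + FP},
$$

$$
\text{Recall}\ (\text{TPR}) = \frac{TP}{TP + FN}, \qquad
\text{TNR} = \frac{TN}{TN + FP}, \qquad
F1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}.
$$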

3. XCNet Implementation

The overall framework of XCNet is illustrated in Fig. 1. The model processes a sensor image with a resolution of $H\times W$ and $C$ channels, where $H=W=224$ and $C=3$. The input image passes through a feature extraction network comprising five convolutional blocks. Each block consists of a 2D convolutional layer with a $3 \times 3$ kernel, a dilation rate of $1 \times 1$, and zero padding that preserves the spatial dimensions, followed by a ReLU [26] activation function and a MaxPooling2D layer with a $2 \times 2$ pool size. Each pooling layer halves the spatial resolution of the feature map along both the height and width axes, while the number of channels doubles from block to block (32, 64, 128, 256, and 512 filters) because each convolutional layer uses twice as many filters as the previous one. After feature extraction, the output feature map has dimensions $\frac{H}{32}\times \frac{W}{32}\times 512$.
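The spatial bookkeeping of the feature extractor can be checked with a short, framework-free sketch. It assumes stride-1 convolutions with zero ("same") padding and 2×2 max pooling with stride 2, consistent with the halving described above; the function name `trace_feature_shapes` is hypothetical. In Keras terms, each block would correspond roughly to `Conv2D(f, 3, padding="same", activation="relu")` followed by `MaxPooling2D(2)`.

```python
from typing import List, Sequence, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def trace_feature_shapes(h: int = 224, w: int = 224, c: int = 3,
                         filters: Sequence[int] = (32, 64, 128, 256, 512)) -> List[Tuple[str, Shape]]:
    """Track tensor shapes through conv + max-pool blocks (assumed 'same' conv, 2x2/stride-2 pool)."""
    shapes = [("input", (h, w, c))]
    for i, f in enumerate(filters, start=1):
        c = f                      # 3x3 'same' conv: spatial size kept, channels = number of filters
        shapes.append((f"conv{i}", (h, w, c)))
        h, w = h // 2, w // 2      # 2x2 max pooling: halves height and width, channels unchanged
        shapes.append((f"pool{i}", (h, w, c)))
    return shapes


for name, shape in trace_feature_shapes():
    print(f"{name}: {shape}")
# The last line printed is "pool5: (7, 7, 512)", i.e. H/32 x W/32 x 512 for H = W = 224.
```

The trace makes explicit that the channel count is set by each convolution's number of filters, whereas pooling only changes the spatial size.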

A GlobalAveragePooling2D (GAP) layer then averages each channel over its spatial dimensions, producing a 512-dimensional feature vector. This layer lets the network focus on the presence of features rather than their spatial location, reduces computational cost, and mitigates overfitting compared with a flattening layer. The GAP output is passed to a multi-layer perceptron (MLP) block comprising three fully connected layers with output sizes of 256, 128, and 10, respectively. Each layer is followed by a ReLU activation and a Dropout layer with a rate of 20% to reduce overfitting. Finally, the MLP output is passed to a classifier, a fully connected layer with an output size of 2 (one unit per class) and a softmax activation that produces a probability distribution over the classes. The class with the highest probability is selected as the predicted label for the image.
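Global average pooling and the softmax decision rule reduce to a few lines of arithmetic. The sketch below assumes a feature map stored as nested lists indexed `[row][column][channel]`; the helper names are hypothetical, the learned MLP weights are omitted, and the class-index convention (0 = good, 1 = defective) is an assumption for illustration.

```python
import math
from typing import List, Sequence, Tuple


def global_average_pool(fmap: List[List[List[float]]]) -> List[float]:
    """Average an H x W x C feature map over its spatial axes, giving one value per channel."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [sum(fmap[i][j][k] for i in range(h) for j in range(w)) / (h * w) for k in range(c)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: Sequence[float]) -> Tuple[int, List[float]]:
    """Return the index of the most probable class and the full probability vector."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__), probs


# 2 x 2 feature map with 2 channels: channel 0 holds 1..4, channel 1 is all zeros.
fmap = [[[1.0, 0.0], [2.0, 0.0]],
        [[3.0, 0.0], [4.0, 0.0]]]
print(global_average_pool(fmap))  # [2.5, 0.0]
label, probs = predict([0.2, 1.5])  # hypothetical classifier logits for (good, defective)
print(label)  # 1
```

For XCNet, the pooled vector would have 512 entries (one per channel of the $7\times 7\times 512$ map), independent of where in the image a feature fired.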

4. Result Analysis on XCNet

In this section, we first investigate the impact of each component of the XCNet model on classification performance, followed by an analysis of how the quality of training data influences model effectiveness.

Ablation study on the number of convolutional blocks and filter configuration. Here, “AN” denotes the analysis number, “Param (M)” the number of model parameters in millions, and “Inf. (ms)” the inference time in milliseconds. “Acc.” stands for accuracy, while “Pre.” refers to precision.

4.1 Ablation study

The ablation study presented in Table 3 investigates the impact of varying the number of convolutional blocks and the filter configuration on classification performance under different levels of class imbalance. It also provides a comprehensive analysis of computational efficiency, including model complexity (parameter count and GFLOPs) and inference speed, which are critical factors for real-time defect detection in industrial settings. Two training ratios, 1:10 and 1:50, are considered, representing different levels of minority-class underrepresentation.

Four architectures were explored, with convolutional blocks configured as [32, 64, 128], [32, 64, 128, 256], [16, 32, 64, 128, 256], and [32, 64, 128, 256, 512]. These configurations progressively increase the network depth and the number of filters per layer, enhancing feature extraction and enabling finer defect detection. Additional blocks enlarge the receptive field, capturing structural variations in sensor board defects, while wider layers increase representational capacity without sacrificing computational efficiency.
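Assuming every configuration uses the same 3×3 stride-1 convolution and 2×2 stride-2 max pooling per block as XCNet, the receptive field of the final feature map depends on the number of blocks rather than on the number of filters. The sketch below applies the standard recursion $r \leftarrow r + (k-1)\,j$, $j \leftarrow j\cdot s$ for kernel size $k$, stride $s$, and cumulative stride $j$; the function name is hypothetical.

```python
def receptive_field(num_blocks: int, conv_kernel: int = 3, pool_kernel: int = 2, pool_stride: int = 2) -> int:
    """Receptive field (in input pixels) after `num_blocks` conv + max-pool blocks.

    Uses the recursion r <- r + (k - 1) * j, j <- j * s, where j is the cumulative stride.
    Assumes stride-1 convolutions; the number of filters per layer does not enter the formula.
    """
    r, j = 1, 1
    for _ in range(num_blocks):
        r += (conv_kernel - 1) * j   # 3x3 conv, stride 1
        r += (pool_kernel - 1) * j   # 2x2 max pool
        j *= pool_stride             # pooling doubles the cumulative stride
    return r


for n in (3, 4, 5):
    print(n, receptive_field(n))
# prints "3 22", then "4 46", then "5 94"
```

Under these assumptions, A.N. 3 and A.N. 4 would share the same 94-pixel receptive field, so the difference between them would stem from channel width rather than spatial context.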

For the 1:10 ratio, deeper architectures such as A.N. 3 ([16, 32, 64, 128, 256]) and A.N. 4 ([32, 64, 128, 256, 512]) substantially outperformed shallower configurations. These models achieved high accuracy (99.09% and 99.55%, respectively) and precision (100%), while also improving minority-class recall (90.90% and 95.45%). Their F1-scores (95.23% and 95.45%) highlight their ability to balance sensitivity and precision effectively. Conversely, shallower architectures such as A.N. 1 ([32, 64, 128]) show a significant drop in recall, achieving only 40.90% and an F1-score of 58.05%, despite maintaining high overall accuracy (94.09%).

For the 1:50 ratio, the performance gap between shallow and deep architectures became even more pronounced because of the severe class imbalance. A.N. 5 ([32, 64, 128]) struggled, with a minority-class recall of 21.05% and an F1-score of 33.33%, demonstrating its limitations in handling extreme imbalance. In contrast, the A.N. 8 ([32, 64, 128, 256, 512]) architecture achieved the best performance, with an accuracy of 98.61%, a precision of 100%, and an F1-score of 91.42%. This configuration also substantially improved recall (84.21%), indicating its effectiveness in capturing minority-class instances even in highly imbalanced scenarios.
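The reported F1-scores can be cross-checked from precision and recall, since F1 is their harmonic mean; with 100% precision it reduces to $2R/(1+R)$. A minimal check using the precision and recall values quoted above for A.N. 3 and A.N. 8 (the function name is hypothetical):

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the text.
print(f"A.N. 3: {100 * f1_score(1.00, 0.9090):.2f}%")  # A.N. 3: 95.23%
print(f"A.N. 8: {100 * f1_score(1.00, 0.8421):.2f}%")  # A.N. 8: 91.43%
```

Both values agree with the reported F1-scores (95.23% and 91.42%) up to rounding in the last digit.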

Computational efficiency and scalability. Shallower models (A.N. 1, A.N. 5) have the fewest parameters (0.2 M) and require 1.18 GFLOPs, but struggle to capture minority-class instances effectively. Deeper architectures generally require more resources but substantially improve recall. For example, A.N. 3 has the same 1.0 M parameters as A.N. 2 but requires fewer FLOPs (0.90 G vs. 1.75 G) and less inference time (3.75 ms vs. 4.65 ms), while achieving better performance thanks to enhanced feature extraction. Building on this, A.N. 4, which uses twice as many convolutional filters per layer, has 4.0 M parameters and 2.31 GFLOPs but maintains an inference time of 5.63 ms, making it a viable option for real-time applications while delivering the highest recall and F1-score.

These findings indicate that increasing the network depth and the number of filters improves performance in heavily imbalanced scenarios while keeping inference times competitive, making deeper architectures such as A.N. 4 and A.N. 8 suitable for real-world manufacturing environments where real-time defect detection is critical.

4.2 Analysis of data distribution on model performance

This section provides an in-depth analysis of how variations in the composition of the training data affect the model's performance metrics in two cases, Case I and Case II. In Case I, the model is trained with the original data distribution, while in Case II, the minority-class samples are augmented. Details of the dataset are given in Section 1.

Fig. 3 shows four graphs, each illustrating one performance metric (accuracy, precision, recall, or F1-score) for both cases, plotted against the fraction of good-class training images used. The x-axis represents the fraction of the 800 good-class images used for training, while the number of minority-class images is fixed at 28 for Case I and 80 for Case II. Within each case, the test set is held constant. This comparative evaluation demonstrates the significant impact of data distribution, particularly the size of the minority class, on model performance.
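The class ratio seen by the model at each point on the x-axis follows from simple arithmetic. The sketch below assumes that the number of good images is the fraction of 800 rounded to the nearest integer; the fractions shown are illustrative rather than the exact grid used in Fig. 3, and the function name is hypothetical.

```python
def good_to_defective_ratio(fraction: float, n_defective: int, n_good_total: int = 800) -> float:
    """Good:defective ratio in a training subset that uses `fraction` of the good-class images."""
    n_good = round(fraction * n_good_total)
    return n_good / n_defective


for frac in (0.1, 0.5, 1.0):  # illustrative fractions
    case1 = good_to_defective_ratio(frac, n_defective=28)  # Case I: 28 defective images
    case2 = good_to_defective_ratio(frac, n_defective=80)  # Case II: 80 defective images
    print(f"fraction={frac:.1f}  Case I {case1:5.2f}:1  Case II {case2:5.2f}:1")
```

With the full good-class set, Case I trains at roughly 28.6:1 while Case II trains at 10:1, and at small fractions Case II is close to balanced.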

Fig. 3(a) shows how the data distribution affects accuracy. In both cases, accuracy progressively improves as the fraction of good-class training data increases. However, Case II starts with a higher initial accuracy (approximately 80%) and reaches near-perfect accuracy (100%) much faster than Case I. This rapid improvement can be attributed to the larger minority class in Case II, which mitigates class imbalance and allows the model to learn both classes effectively, even with limited good-class training data. In contrast, Case I improves more slowly, likely because its smaller minority class creates a greater imbalance and requires additional good-class training data to achieve comparable accuracy. Overall, Case II shows a clear accuracy advantage across all training levels, highlighting the critical role of managing class distributions to optimize model performance.

As shown in Fig. 3(b), precision follows a similar trend: Case II achieves near-100% precision early, whereas Case I exhibits a more gradual increase. The superior performance of Case II can be attributed to its larger minority class, which reduces the overall class imbalance. This improved balance enables the model to minimize false positives even with limited good-class training data. In contrast, Case I, with its smaller minority class, struggles to achieve comparable precision under similar conditions.

Fig. 3(c) shows the stability and sensitivity of recall under different data distributions. Unlike accuracy and precision, which improve steadily as training data increase, recall behaves differently. In Case I, recall fluctuates at lower training fractions, with noticeable dips indicating inconsistent sensitivity to the minority class. This instability likely arises from the small number of minority-class samples in the training set. In contrast, recall in Case II remains consistently high across all training fractions, demonstrating the model's robustness in detecting the minority class. The larger minority class in Case II mitigates class imbalance, ensuring that the defective class receives adequate representation during training.

Fig. 3(d) illustrates the F1-score, which combines precision and recall into a single, holistic measure of the model's classification performance. Case II consistently outperforms Case I, with a rapid increase in F1-score that stabilizes near 100% even at low fractions of good-class training data. This superior performance can be attributed to Case II's larger minority class, which enhances the model's ability to distinguish between the classes and reduces the trade-off between precision and recall. In contrast, Case I shows a slower, more gradual improvement in F1-score and remains consistently lower across all training levels. This slower progression reflects the challenge of balancing precision and recall with a smaller minority class, which intensifies class imbalance and limits the model's performance when less training data are available.

The key observations and implications are as follows. First, minority-class size has a significant impact on model performance: Case II, with its larger minority class, consistently outperforms Case I across all metrics, demonstrating that a more balanced class distribution improves learning and generalization. Second, regarding the sufficiency of training data, Case II achieves near-optimal performance with fewer training images, showing that balanced datasets can reach high accuracy and strong values on the other key metrics with less data. Finally, although both cases improve as training data increase, Case II excels in accuracy, precision, recall, and F1-score even at small training fractions. This stability underscores the importance of balanced class distributions for reliable and consistent model performance.

Data quality study. The plots show the effect of varying the proportion of good-class training data on (a) accuracy, (b) precision, (c) recall, and (d) F1-score for two cases: Case I (original data) and Case II (augmented data).

4.3 Result analysis

Table 4 compares XCNet with other state-of-the-art (SOTA) models, including VGG16 [27], ResNet34 [28], ResNet50 [28], ViT-Tiny [29], and ViT-Base [29], all pretrained on ImageNet-1k. Although these models perform well on large-scale datasets, they struggle to adapt to the small, domain-specific defect dataset, particularly under severe class imbalance. ViT-Base, for instance, with 86.90 million parameters and 17.58 GFLOPs, fails to surpass XCNet's performance, likely because of the limited training data (fewer than 1,000 images) and the domain shift from natural images to industrial defect images.

In contrast, XCNet delivers higher recall and F1-scores under both the 1:10 and 1:50 imbalance ratios, attaining 99.45% and 98.62% accuracy, respectively, while maintaining perfect precision (100%). From a complexity standpoint, XCNet requires substantially fewer parameters (4.0 M) and GFLOPs (2.31 G) than the larger SOTA models, such as VGG16 with 138.36 M parameters and 15.47 GFLOPs or ViT-Base with 86.90 M parameters and 17.58 GFLOPs, which corresponds to roughly 35 and 22 times fewer parameters, respectively, and about 7 to 8 times fewer GFLOPs. This combination of strong performance and moderate computational requirements underscores XCNet's suitability for real-world manufacturing scenarios, where data are scarce and real-time defect detection is critical.

Result comparison with other state-of-the-art models. Here, Inference (ms) refers to model inference time in milliseconds. The best results are shown in bold.

Training and test loss under various augmentation scenarios.

4.4 Analysis on overfitting to synthetic patterns

Figs. 4 and 5 analyze the potential risk of overfitting, specifically whether the model learns artificial patterns introduced by data augmentation instead of genuine defect features. To investigate this, we applied augmentation only to the minority class in both the training and test sets. Each augmented set includes both original and modified images, ensuring that synthetic images do not dominate the evaluation.

In Fig. 4, the training loss (shown for the original training set; other curves follow similar trends and are omitted for clarity) remains stable, while the test losses across various augmentations (e.g., flipped images) show no abrupt spikes, indicating that the model learns robust features rather than memorizing synthetic patterns. Fig. 5 further underscores this robustness by showing steady improvements in recall and F1-score for each augmented scenario. The fact that performance consistently increases as more augmentations are introduced suggests that XCNet acquires generalizable defect features rather than relying on artificially introduced cues.

These combined findings strongly support the effectiveness of our augmentation strategy in helping XCNet learn meaningful defect characteristics without overfitting to synthetic patterns.

Recall and F1-score comparison across different augmentation scenarios.

4.5 Adaptability to other semiconductor products

Although our work focuses on sensor board defect detection, XCNet can be adapted to other semiconductor components and industrial products. Numerous studies confirm the versatility of CNN-based architectures for various defect detection tasks, from wafer map analysis [30] to surface flaw identification [31, 32]. Transfer learning has further demonstrated the adaptability of CNNs to different data distributions [33, 34]. Building on these findings, we expect XCNet's emphasis on efficient feature extraction and robust classification to require only minimal adjustments, such as domain-specific data augmentation or slight architectural tweaks, to detect defects across diverse industrial contexts. This adaptability highlights XCNet's potential for broader impact on semiconductor inspection and defect detection in a wide range of manufacturing scenarios.